TL;DR: The first 90 days follow a predictable pattern: Week 1 requires daily attention, weeks 2-4 need occasional check-ins, and by month three the agent fades into background infrastructure you rarely think about. Most issues surface in the first two weeks. Expect 3-5 edge cases that need adjustment, one integration hiccup, and a brief team adoption curve. This article walks through each phase honestly so you know what's coming.

Why the First 90 Days Matter

You signed the contract. We built the agent. Testing looked good. Now it's live.

This is where some vendors disappear. "Call us if you have problems." That's not how this works.

The first 90 days determine whether the agent becomes trusted infrastructure or an expensive experiment your team routes around. Getting this period right matters more than getting the build perfect. A good agent with poor adoption fails. A decent agent with proper support succeeds.

Here's what actually happens.

Week 1: The Attention-Intensive Phase

Your time investment: ~30 minutes daily

The agent is live. Real transactions are flowing through it. Everyone's watching.

What You'll Experience

Monday-Tuesday: Cautious optimism. The agent handles routine cases well. Your team keeps checking outputs, comparing them to what they would have done. Mostly matches. A few "huh, interesting" moments.

Wednesday-Thursday: First edge case appears. Something the agent handles differently than expected. Not wrong, exactly, but not how Sarah would have done it. Is this a problem? Probably not, but we investigate anyway.

Friday: The weekly review call. We look at every decision the agent made. Discuss the edge case. Decide whether to adjust logic or accept the variation. Usually it's fine.

Common Week 1 Issues

Edge cases we didn't anticipate: Testing catches most, not all. Real-world data is messier. A customer submits something weird. A field contains unexpected formatting. The agent makes a reasonable choice, but not the optimal one.

Team double-checking everything: Normal and healthy. Your people don't trust the agent yet. They verify outputs manually. This defeats the time-saving purpose temporarily, but it's how trust gets built.

Integration timing quirks: Systems don't always sync perfectly. A record updates in your CRM but the agent doesn't see it for 30 seconds. Usually irrelevant, occasionally causes confusion.

What We're Doing

Monitoring transactions for errors

Reviewing decision logs daily

Responding to questions within hours

Documenting any issues for pattern analysis

Adjusting thresholds and logic as needed

What You're Doing

Flagging anything that looks off

Encouraging your team to report concerns (no matter how small)

Resisting the urge to override the agent preemptively

Attending the Friday review call

Weeks 2-4: The Calibration Phase

Your time investment: 15-30 minutes every few days

The initial novelty wears off. The agent becomes part of the workflow.

What You'll Experience

Week 2: Fewer surprises. The obvious edge cases got fixed in week 1. Team starts trusting routine decisions. They still spot-check, but less frantically. Someone says "the agent handled that one well" without being prompted.

Week 3: First time you realize you forgot to check the agent dashboard for two days. Nothing broke. The backlog that used to pile up on Monday mornings is... gone. Your ops person mentions they left early yesterday because everything was caught up.

Week 4: The agent makes a decision you wouldn't have made. But looking at it, the agent's choice was actually better. It followed the rules more consistently than your team did. This is either reassuring or unsettling, depending on your perspective.

Common Weeks 2-4 Issues

Over-reliance too fast: Some team members stop paying attention entirely. This is premature. The agent needs oversight during calibration.

Under-reliance from skeptics: Other team members keep doing work manually "just in case." This creates duplicate effort and confusion about what's actually handled.

Process drift discovery: The agent follows the documented process exactly. Turns out your team had drifted from that process in small ways. Sometimes the drift was smart; sometimes it was sloppy. Either way, it surfaces now.

Volume variations: Your "typical" volume has peaks and valleys you didn't mention. The agent handles them fine, but API costs might vary more than estimated.

What We're Doing

Weekly review calls (shorter now, 15-20 minutes)

Monitoring for patterns, not individual transactions

Refining decision logic based on accumulated data

Documenting institutional knowledge the agent is learning

Adjusting any cost estimates based on actual usage

What You're Doing

Enforcing consistent team behavior (everyone uses the agent, or no one does)

Noting any process improvements the agent revealed

Starting to track time savings and error reduction

Deciding what to do with recovered capacity

Month 2: The Normalization Phase

Your time investment: 30 minutes weekly

The agent is no longer new. It's just how things work now.

What You'll Experience

Weeks 5-6: You stop thinking about the agent as a separate thing. It's infrastructure, like your phone system or accounting software. When someone asks "how do you handle [process]?" you say "the agent does it" without elaboration.

Weeks 7-8: First time a team member references what the agent taught them. "I noticed the agent always checks X before Y. That's actually smarter than how I was doing it." The agent is now a process documentation source.

The Metrics Start Showing

By now, you have enough data to measure impact:

Time saved: Hours per week freed up. Usually 15-30 for the specific process.

Error reduction: Mistakes caught or prevented. Track before/after.

Speed improvement: Time from trigger to completion. Often 60-80% faster.

Consistency: Variation in how similar cases are handled. Should approach zero.

We'll review these together. If the numbers don't match projections, we figure out why.
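For readers who want to see the arithmetic, here is a minimal sketch of how these four numbers could be computed from before/after records. It is an illustration, not the reporting we actually run: the `Case` record, its fields, and the sample figures are all hypothetical, and the spread of turnaround times is only a rough proxy for consistency.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Case:
    """One handled case. Every field is hypothetical, for illustration only."""
    staff_minutes: float       # hands-on staff time spent on the case
    turnaround_minutes: float  # time from trigger to completion
    had_error: bool            # whether a mistake was found afterwards


def impact(baseline, current, cases_per_week):
    """Compare a pre-agent baseline period with the current period."""
    def error_rate(cases):
        return sum(c.had_error for c in cases) / len(cases)

    base_turn = mean(c.turnaround_minutes for c in baseline)
    cur_turn = mean(c.turnaround_minutes for c in current)
    saved_per_case = (mean(c.staff_minutes for c in baseline)
                      - mean(c.staff_minutes for c in current))

    return {
        # Time saved: staff minutes freed per case, scaled to weekly volume.
        "hours_saved_per_week": saved_per_case * cases_per_week / 60,
        # Error reduction: track the rate before and after.
        "error_rate_before": error_rate(baseline),
        "error_rate_after": error_rate(current),
        # Speed improvement: relative drop in trigger-to-completion time.
        "speed_improvement_pct": 100 * (base_turn - cur_turn) / base_turn,
        # Consistency proxy: spread of turnaround times (lower is steadier).
        "turnaround_spread_minutes": pstdev([c.turnaround_minutes for c in current]),
    }


# Made-up sample data: four cases before the agent, four after.
baseline = [Case(20, 240, False), Case(25, 300, True),
            Case(15, 180, False), Case(20, 280, False)]
current = [Case(2, 60, False), Case(4, 80, False),
           Case(3, 70, False), Case(3, 70, False)]

result = impact(baseline, current, cases_per_week=60)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

The important design choice is the baseline: without numbers captured before go-live, none of these comparisons can be made, which is why tracking starts in weeks 2-4.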

Common Month 2 Issues

Scope creep requests: "Can the agent also handle X?" Often yes, but that's a separate project. We'll quote it if you want.

Integration with adjacent processes: The agent's process is smooth now. The processes that feed into it or receive from it become the new bottleneck. Good problem to have, but visible.

Reporting gaps: You want data the agent doesn't currently capture. Usually easy to add, sometimes requires logic changes.

What We're Doing

Check-ins every two weeks (unless you need more)

Performance reporting against baseline

Identifying optimization opportunities

Planning any expansion or improvement

What You're Doing

Redirecting saved time to higher-value work

Documenting ROI for internal stakeholders

Considering what else could benefit from automation

Providing feedback on what's working and what isn't

Month 3: The Background Phase

Your time investment: 30 minutes monthly

The agent is invisible in the best way. It just works.

What You'll Experience

You forget the agent exists until someone mentions it. New employees learn "the system handles that" without knowing there was ever a manual process. The dashboard exists, but you check it monthly, not daily.

When something unusual happens, you trust the agent to either handle it correctly or escalate appropriately. You've stopped second-guessing.

The 90-Day Review

We schedule a full review:

Performance metrics: Did the agent deliver projected ROI?

Reliability stats: Uptime, error rates, escalation frequency

Cost actuals: How do real operating costs compare to estimates?

Team feedback: What do the people working with the agent think?

Improvement opportunities: What would make it even better?

This review determines the path forward. Most clients continue as-is with standard support. Some want additions. A few realize they need a second agent for a different process.

What Happens After 90 Days

The agent transitions to steady-state operations:

Monthly performance reports

Proactive monitoring (we catch issues before you notice)

Minor adjustments included in operating costs

Quarterly business reviews (optional but recommended)

You'll hear from us when something needs attention. Otherwise, the agent runs quietly in the background, doing its job.

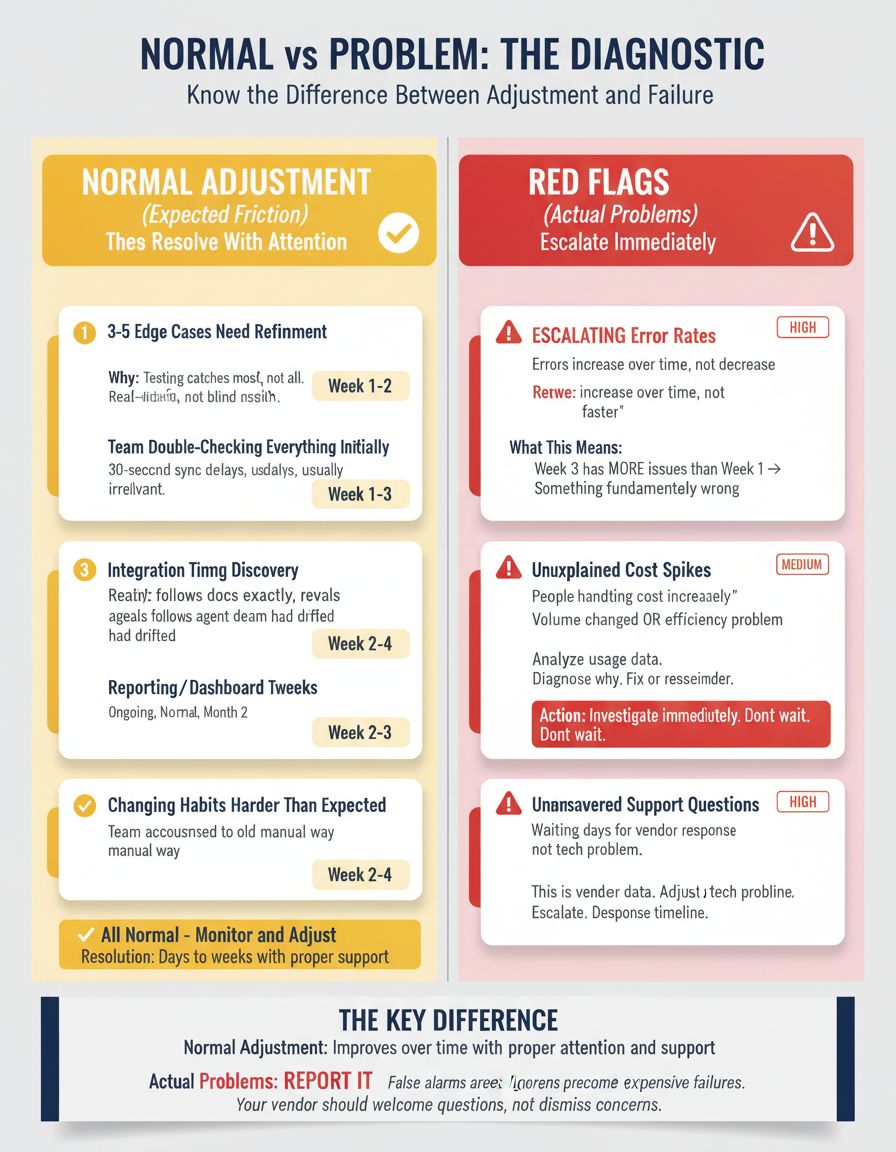

The Realistic Adjustment Curve

Let me be direct about what to expect:

Things that will go smoothly:

Routine transactions (80-90% of volume)

Clear-cut decisions with obvious right answers

Integrations with well-documented systems

Processes that were already well-defined

Things that will need adjustment:

3-5 edge cases requiring logic refinement

At least one integration timing issue

Team adoption inconsistencies

Reporting or dashboard tweaks

Things that might be harder than expected:

Changing team habits around the old process

Accepting that the agent's way might be better than your way

Resisting the urge to over-customize

Trusting the system during the first few weeks

None of these are failures. They're the normal path from "new tool" to "trusted infrastructure." The question isn't whether you'll hit bumps. You will. The question is whether you have support to navigate them.

Red Flags That Something's Actually Wrong

Normal adjustment looks like minor friction that resolves with attention. Actual problems look different:

Escalating error rates: Errors should decrease over time, not increase. If week 3 has more issues than week 1, something's wrong.

Team routing around the agent: If people start handling things manually "because it's faster," the agent isn't delivering value. We need to investigate.

Unexplained cost spikes: Operating costs should be predictable. Sudden increases mean something changed: either your volume or our efficiency.

Unanswered questions: If you're waiting days for responses to support requests, that's a vendor problem, not a technology problem.

If you see these patterns, escalate. Don't assume it'll work itself out.
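If you track a few weekly numbers, the four red flags above can be checked mechanically. The sketch below is one hedged way to do it. The inputs, the 1.5x cost-spike ratio, and the one-day wait limit are all assumptions chosen for illustration, not thresholds we prescribe.

```python
from statistics import mean


def red_flags(error_rates, weekly_costs, manual_share, open_request_days,
              cost_spike_ratio=1.5, max_wait_days=1.0):
    """Return the warning signs present in week-by-week data (oldest week first).

    All inputs and both thresholds are hypothetical; tune them to your process.
    """
    flags = []
    # Errors should fall over time: compare the latest week with week 1.
    if len(error_rates) >= 2 and error_rates[-1] > error_rates[0]:
        flags.append("escalating error rate")
    # A growing share of manually handled cases suggests the team is routing around the agent.
    if len(manual_share) >= 2 and manual_share[-1] > manual_share[-2]:
        flags.append("team routing around the agent")
    # Costs should be predictable: compare the latest week with the average of earlier weeks.
    if len(weekly_costs) >= 2 and weekly_costs[-1] > cost_spike_ratio * mean(weekly_costs[:-1]):
        flags.append("unexplained cost spike")
    # Support requests should not sit unanswered for days.
    if any(days > max_wait_days for days in open_request_days):
        flags.append("unanswered support requests")
    return flags


# Made-up sample data for three weeks of operation.
healthy = red_flags([0.04, 0.02, 0.01], [120.0, 110.0, 115.0], [0.30, 0.15, 0.10], [0.2])
troubled = red_flags([0.02, 0.03, 0.05], [120.0, 110.0, 260.0], [0.10, 0.15, 0.25], [3.0])
print(healthy)
print(troubled)
```

A check like this only tells you when to ask questions. It does not replace the review calls, where the cause of a flag actually gets investigated.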

What Good Support Looks Like

You shouldn't have to wonder whether you're getting adequate support. Here's what to expect:

Week 1:

Daily check-ins (brief, 5-10 minutes)

Same-day response to any issues

End-of-week full review

Weeks 2-4:

Weekly check-in calls

Response within 4 hours during business hours

Proactive notification of any anomalies

Months 2-3:

Check-ins every two weeks, then monthly

Response within 24 hours (urgent issues faster)

Monthly performance reporting

Ongoing:

Monthly reports

Response within 48 hours for non-urgent items

Quarterly business reviews available

If your vendor isn't providing this level of engagement, especially in the critical first month, that's a problem.
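To hold a vendor to these response times, you can check each support request against the target for its phase. The following is a minimal sketch under stated assumptions: weeks 2-4 are mapped to days 8-28 and months 2-3 to days 29-90, business-hours rules are ignored, and the function name and sample dates are hypothetical.

```python
from datetime import datetime, timedelta


def met_target(go_live: datetime, asked: datetime, answered: datetime) -> bool:
    """Check one support request against the response target for its phase.

    Simplification: hour limits are wall-clock hours, not business hours.
    """
    day = (asked.date() - go_live.date()).days + 1  # go-live day counts as day 1
    if day <= 7:
        # Week 1: same-day response.
        return answered.date() == asked.date()
    if day <= 28:
        limit_hours = 4    # weeks 2-4
    elif day <= 90:
        limit_hours = 24   # months 2-3
    else:
        limit_hours = 48   # ongoing, non-urgent items
    return answered - asked <= timedelta(hours=limit_hours)


go_live = datetime(2025, 1, 6, 9, 0)

# Week 1 question answered the same afternoon.
week1_ok = met_target(go_live, datetime(2025, 1, 8, 16, 0), datetime(2025, 1, 8, 17, 30))
# Week 3 question answered after five and a half hours.
week3_ok = met_target(go_live, datetime(2025, 1, 20, 10, 0), datetime(2025, 1, 20, 15, 30))
# Month 2 question answered the next morning.
month2_ok = met_target(go_live, datetime(2025, 2, 20, 10, 0), datetime(2025, 2, 21, 9, 0))

print(week1_ok, week3_ok, month2_ok)
```

Logging the result for every request gives you a simple record to bring to the 90-day review.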

Preparing for Day One

If you're considering an agent, here's how to set yourself up for a smooth first 90 days:

Before go-live:

Identify who will be the agent's "owner" on your team

Communicate to staff what's changing and when

Set expectations: this is a transition, not instant magic

Clear ~30 minutes daily for week 1 involvement

Know how to reach support and what response time to expect

Week 1 mindset:

Expect small issues. They're normal.

Report everything, even things that seem minor.

Resist the urge to turn off the agent at the first hiccup.

Trust the process, but verify the outputs.

Ongoing:

Measure results against the projections that justified the investment

Redirect saved time to genuinely valuable work

Share feedback, both positive and negative, so the agent improves

Think about what else could benefit from this approach

Ready to Start?

The first 90 days require effort. Not enormous effort: 2-3 hours in week 1, declining to almost nothing by month 3. But it's real involvement, not passive observation.

If you're ready for that, the payoff is infrastructure that handles work you used to do manually, consistently, without complaints, forever.

Not sure if you're ready? Use the Bottleneck Calculator to verify the investment makes sense. Read about what agents actually do if you want more background. Review the implementation process to understand what happens before go-live.

Ready to move forward? Book a Bottleneck Audit. We'll assess your process, estimate costs and timeline, and tell you honestly whether this is right for your situation.

Want to see how other businesses navigated their first 90 days? Download "Unstuck: 25 AI Agent Blueprints" for real implementation stories with actual timelines and results.

by SP, CEO

for the AdAI Ed. Team