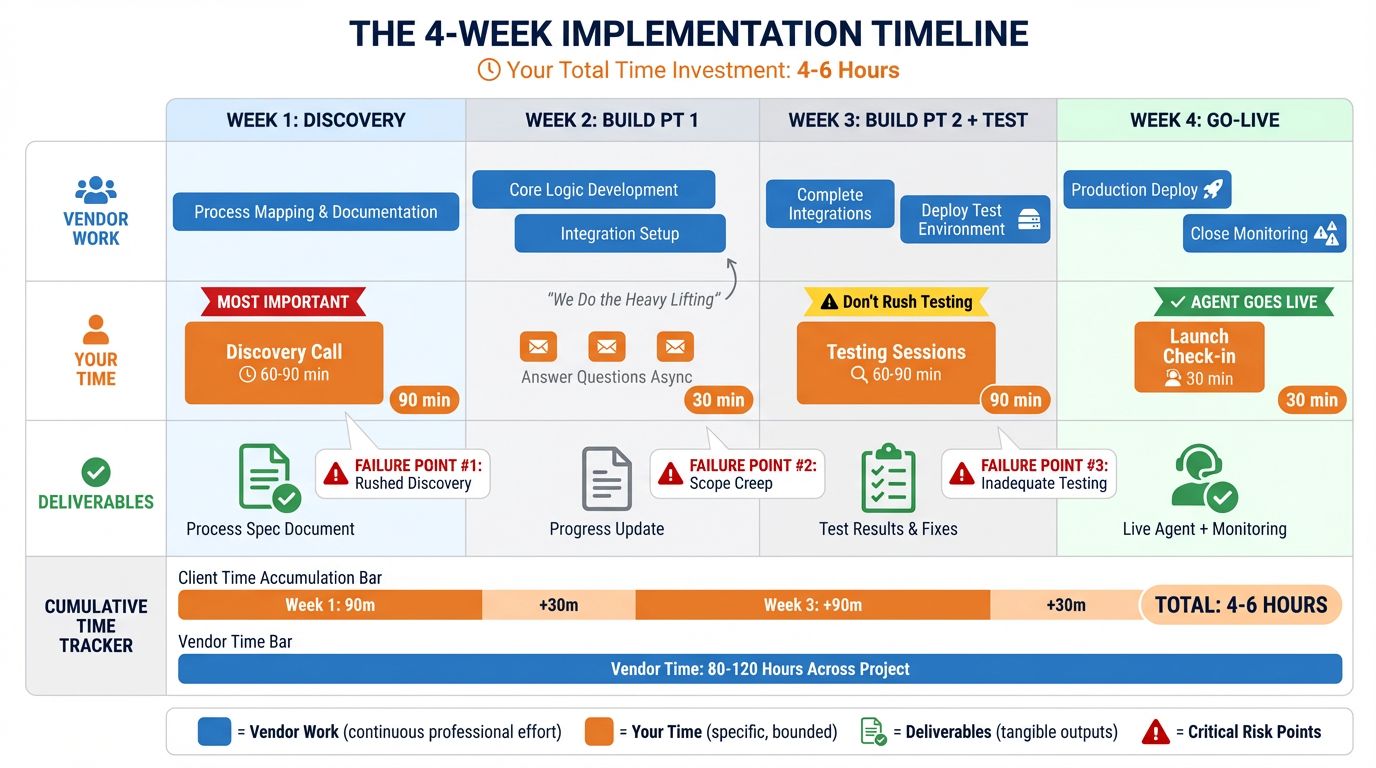

TL;DR: Building a custom AI agent takes about 4 weeks from kickoff to production. Your total time investment: 4-6 hours spread across that period. Week 1 is discovery (understanding your process), weeks 1-2 are build (we do the work), week 3 is testing (you verify it works), and week 4 is go-live with monitoring. The most common failure points aren't technical - they're unclear process documentation, scope creep mid-project, and inadequate testing. This article walks through each phase in detail so you know exactly what to expect before signing anything.

Why I'm Showing You the Whole Process

Most vendors keep implementation vague. "We'll work closely with your team." "Our proven methodology ensures success." "Leave the details to us."

That vagueness serves them, not you.

When you don't know what to expect, you can't evaluate whether a project is on track. You can't ask good questions. You can't spot problems early. You're dependent on the vendor to tell you everything is fine - and they always will, right up until it isn't.

So here's everything. The timeline. The meetings. The work. The places where projects go sideways and how to prevent it.

If another vendor won't give you this level of detail, ask yourself why.

The Four-Week Overview

| Week | Phase | Your Time | What Happens |
|---|---|---|---|
| 1 | Discovery | 60-90 min | We learn your process in detail |
| 1-2 | Build | 30-60 min | We develop the agent, you answer questions |
| 3 | Testing | 60-90 min | You verify it works correctly |
| 4 | Go-Live | 30 min | Launch with monitoring, quick adjustments |

Total time from you: 4-6 hours over 4 weeks, including preparing for discovery and reviewing the Process Specification Document.

That's not a sales pitch number - it's the real average across projects. Some clients invest more because they want to be deeply involved. Some invest less because they trust the process and just want results. But 4-6 hours is the baseline for a successful implementation.

Let's break down each phase.

Week 1: Discovery

Purpose: Understand your process deeply enough to automate it correctly.

What happens:

We schedule a 60-90 minute call. This is the most important meeting of the entire project. I'll ask questions that might seem obvious or tedious. That's intentional.

Questions we'll cover:

Walk me through the process step by step. What triggers it? What's the first action?

Who does this work today? How long have they been doing it?

What information do they need to complete each step? Where does it come from?

What decisions get made along the way? What factors influence those decisions?

What are the edge cases? When does the normal process break down?

What systems are involved? Show me the screens if possible.

What does "done well" look like? How do you know when it's been handled correctly?

What goes wrong most often? What are the common mistakes?

What would you change about the current process if you could?

Why this matters:

The agent can only be as good as our understanding of your process. If we miss an edge case in discovery, we'll build something that fails when that edge case appears. If we misunderstand a decision point, the agent will make wrong choices.

I've seen projects fail because discovery was rushed. "Oh, we pretty much understand what you need." No. We need to understand exactly what you need, including the weird exceptions your best employee handles automatically without thinking about it.

Your preparation:

Before the call, think through your process. Better yet, document it roughly - even bullet points help. If the person who actually does the work isn't the decision-maker, have them join the call. The details live in their head.

Deliverable:

After discovery, we produce a Process Specification Document. This describes:

Every step the agent will handle

Decision logic for each choice point

Integration points with your systems

Edge cases and how they'll be handled

Success metrics we'll measure

What's explicitly out of scope

You review this document. Changes are easy at this stage, expensive later.

Common failure point #1: Rushing discovery

When clients say "let's just get started, we can figure out details later," projects go sideways. The details matter. If discovery feels tedious, that's actually a good sign - it means we're being thorough.

Weeks 1-2: Build

Purpose: Develop the agent based on the agreed specification.

What happens:

This is mostly our work, not yours. The development team builds the agent according to the Process Specification. We create the logic, set up integrations, configure decision rules, and build the interfaces.

Your involvement:

Minimal, but important. We'll have questions. Usually 2-4 async exchanges (email or Slack) about details that weren't clear in discovery.

Examples:

"When two customers have the same priority, how do you decide who gets handled first?"

"The API documentation for [your software] is unclear about X. Can you verify how this field actually works?"

"We found an edge case not covered in the spec. If [situation], what should happen?"

Quick responses keep the project moving. Multi-day delays on simple questions slow everything down.

What we're building:

The agent itself has several components:

Core logic: The decision-making engine that follows your process rules

Integrations: Connections to your existing systems (CRM, scheduling software, accounting, etc.)

Interface: How you'll interact with the agent (dashboard, notifications, approval workflows)

Monitoring: Logging and alerting so we can see what the agent is doing

Override controls: Ways for you to intervene, adjust, or stop the agent

Progress updates:

We send brief updates at the end of each week. What's done, what's in progress, any blockers. No jargon, no fluff. If something's behind schedule, we tell you why and what we're doing about it.

Common failure point #2: Scope creep

Mid-build, clients sometimes realize they want additional features. "While you're in there, could the agent also handle X?"

Sometimes that's fine - minor additions that don't change the core architecture. Often it's not - significant additions that require rethinking the design, extending the timeline, and increasing the cost.

We'll tell you which is which. But it's simpler to stick to the agreed scope for the initial build and plan additions as a Phase 2. First agent goes live, proves value, then we expand.

Week 3: Testing

Purpose: Verify the agent works correctly before it handles real work.

What happens:

We deploy the agent in a test environment connected to your real systems (but not handling real work). Then we run it through scenarios.

Testing approach:

Happy path testing: Normal cases that should work perfectly. We verify they do.

Edge case testing: The weird situations from discovery. Does the agent handle them correctly?

Failure testing: What happens when something goes wrong? API timeout. Missing data. Conflicting inputs. The agent should fail gracefully, not catastrophically.

Volume testing: If you expect 100 transactions daily, we test with 200. The agent should handle peaks without breaking.
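To make "fail gracefully" concrete, here is a minimal Python sketch, not production code. It assumes a hypothetical `fetch` function that calls one of your systems and may raise `TimeoutError`, a hypothetical `ReviewQueue` where a person picks up escalated work, and a made-up required field, `customer_email`. Timeouts are retried with increasing waits; missing data or repeated timeouts route the task to a person instead of crashing or guessing.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Outcome:
    status: str                    # "done" or "escalated"
    result: Optional[dict] = None
    reason: str = ""


@dataclass
class ReviewQueue:
    """Hypothetical stand-in for wherever a person picks up escalated work."""
    items: list = field(default_factory=list)

    def escalate(self, task_id: str, reason: str) -> None:
        self.items.append((task_id, reason))


def handle_task(task_id: str, fetch: Callable[[str], dict], queue: ReviewQueue,
                retries: int = 3, base_delay: float = 0.5) -> Outcome:
    """Process one task without crashing, and without guessing at missing data."""
    for attempt in range(retries):
        try:
            record = fetch(task_id)
        except TimeoutError:
            # Transient failure: wait longer each time (0.5s, 1s, ...) before retrying.
            if attempt < retries - 1:
                time.sleep(base_delay * 2 ** attempt)
            continue
        if not record.get("customer_email"):
            # Missing data: hand the task to a person instead of improvising.
            queue.escalate(task_id, "missing customer_email")
            return Outcome("escalated", reason="missing customer_email")
        return Outcome("done", result=record)
    # Every attempt timed out: escalate rather than fail silently.
    queue.escalate(task_id, f"API timed out {retries} times")
    return Outcome("escalated", reason="timeout")
```

During failure testing, each branch gets exercised on purpose: a fake `fetch` that always times out, one that times out once and then succeeds, and one that returns a record with the required field missing.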

Your involvement:

This is where you re-engage. We'll schedule 1-2 sessions (60-90 minutes total) where you:

Review test results

Run the agent through scenarios yourself

Verify decisions match what you'd expect

Identify anything that doesn't look right

Your team might be involved too: the people who do this work today are best positioned to spot when something's off.

What we're looking for:

Accuracy: Does the agent make correct decisions?

Completeness: Does it handle all the cases it should?

Usability: Is the interface clear? Can you find what you need?

Speed: Does it perform fast enough for your needs?

Reliability: Does it work consistently, or are there intermittent failures?

Fixing issues:

Testing always surfaces issues. That's the point. Small issues get fixed immediately. Larger issues might require design changes; we'll discuss the tradeoffs and decide together.

Common failure point #3: Inadequate testing

The pressure to go live is real. "It looks good enough, let's just launch."

Don't. Every hour spent testing saves ten hours fixing problems in production. An agent that fails on live customer data is far more expensive than an extra few days of thorough testing.

If we're pushing back on launching early, it's because we've seen what happens when testing gets cut short.

Week 4: Go-Live

Purpose: Deploy the agent into production with careful monitoring.

What happens:

The agent starts handling real work. But we don't just flip a switch and walk away.

Phased rollout:

For most projects, we recommend starting with a subset of work. Maybe 20% of transactions, or one category of tasks, or one geographic region. The agent proves itself on limited scope before taking over completely.

This catches issues that testing missed. Real-world data is messier than test data. Real users behave differently than we expect. Limited rollout contains the blast radius if something goes wrong.
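One common way to send "maybe 20% of transactions" to the agent is to hash each transaction ID into a stable bucket. The Python sketch below illustrates that technique under assumptions: transactions are dicts with hypothetical `id` and `category` fields, and the salt string is arbitrary. Hashing rather than calling a random number generator means a given transaction always lands on the same side, and raising the percentage later keeps every transaction that was already routed to the agent.

```python
import hashlib


def in_rollout(transaction_id: str, percent: int, salt: str = "rollout-v1") -> bool:
    """Deterministically decide whether a transaction goes to the agent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{transaction_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100   # stable bucket in 0..99
    return bucket < percent


def route(txn: dict, percent: int, categories: frozenset = frozenset()) -> str:
    """Send a slice of work to the agent; everything else stays as it is today."""
    if categories and txn["category"] not in categories:
        return "existing_process"          # this category isn't in the pilot yet
    return "agent" if in_rollout(txn["id"], percent) else "existing_process"
```

For example, `route(txn, 20, frozenset({"invoices"}))` limits the pilot to one category and a fifth of its volume; full rollout is `route(txn, 100)`.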

Monitoring:

During the first week (and ongoing), we track:

Decision accuracy: Are agent decisions being overridden? How often? Why?

Processing time: How long does each transaction take?

Error rate: What percentage fail or require intervention?

User feedback: Is your team reporting issues?

We review metrics daily during launch week, then weekly after stabilization.
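The first three numbers can be computed straight from the agent's decision log; user feedback comes from your team, not the log. Here is a minimal Python sketch assuming a hypothetical log record with one entry per transaction. The field names and the alert thresholds (10% overrides, 5% errors) are illustrative only; in practice they are among the thresholds that get tuned during launch week.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass
class Decision:
    """Hypothetical decision-log record; real field names will differ."""
    transaction_id: str
    seconds: float      # processing time for this transaction
    overridden: bool    # a person changed the agent's decision
    failed: bool        # errored out or needed manual intervention


def launch_metrics(log: Sequence[Decision]) -> dict:
    """Summarize a period's decision log into the launch-week numbers."""
    if not log:
        return {"count": 0, "override_rate": 0.0, "error_rate": 0.0, "avg_seconds": 0.0}
    n = len(log)
    return {
        "count": n,
        "override_rate": sum(d.overridden for d in log) / n,
        "error_rate": sum(d.failed for d in log) / n,
        "avg_seconds": mean(d.seconds for d in log),
    }


def alerts(m: dict, max_override_rate: float = 0.10, max_error_rate: float = 0.05) -> list:
    """Flag metrics above illustrative thresholds."""
    out = []
    if m["override_rate"] > max_override_rate:
        out.append(f"override rate {m['override_rate']:.0%} exceeds {max_override_rate:.0%}")
    if m["error_rate"] > max_error_rate:
        out.append(f"error rate {m['error_rate']:.0%} exceeds {max_error_rate:.0%}")
    return out
```

Run daily during launch week and weekly afterwards, `alerts(launch_metrics(todays_log))` gives a short list of things worth a closer look.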

Your involvement:

Brief check-in call (30 minutes) after the first few days. How's it going? Any concerns? Anything behaving unexpectedly?

Your team should know how to flag issues. Clear escalation path: if something seems wrong, who do they tell?

Adjustments:

The first week always involves tweaks. Thresholds that need adjustment. Edge cases we didn't anticipate. User interface improvements based on actual usage.

This is normal. An agent isn't a finished product at launch - it's a working system that improves through feedback.

Full rollout:

Once the limited rollout proves stable (usually 1-2 weeks), we expand to full scope. Same monitoring, same adjustment process, just bigger scale.

After Go-Live: What Ongoing Support Looks Like

The build isn't the end. Agents need ongoing attention.

Monthly operating costs ($200-$1,000) cover:

Hosting and infrastructure: Servers, databases, third-party software, API costs

Monitoring and alerting: Automated systems watching for problems

Bug fixes: Issues that emerge get resolved

Minor adjustments: Small tweaks to decision logic as your process evolves

What's not included (requires separate scoping):

Major feature additions

Agent runs (a per-run cost; usually negligible, and we'll give you an estimate before the build)

New integrations with additional systems

Significant logic changes

Performance optimization for dramatically increased volume

Typical support cadence:

First month: Weekly check-ins, high-touch monitoring

Months 2-3: Check-ins every two weeks, standard monitoring

Ongoing: Monthly review, proactive maintenance

Most clients rarely need to think about the agent after the first month. It just runs. That's the goal.

The Three Places Projects Actually Fail

I've been honest about common failure points throughout. Let me consolidate them:

1. Unclear process definition

If you can't explain exactly how the work should be done, we can't automate it. "Use judgment" isn't automatable. "Apply these rules in this order with these exceptions" is.

Prevention: Spend the time in discovery. Document your process before the call if possible. Include the people who actually do the work.
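Here is what "apply these rules in this order with these exceptions" can look like once it's written down, as a minimal Python sketch. The rules, field names (`legal_hold`, `tier`, `amount`), the 5,000 threshold, and the action names are all hypothetical; the point is the shape: an ordered list where the first matching rule wins, exceptions sit above the general rules they override, and a default catches everything else.

```python
from typing import Callable, NamedTuple, Tuple


class Rule(NamedTuple):
    name: str
    applies: Callable[[dict], bool]
    action: str


# Hypothetical rules for one process. Order matters: the first rule that
# matches wins, so exceptions sit above the general rules they override.
RULES = [
    Rule("legal hold (exception)", lambda r: r.get("legal_hold", False), "send_to_manager"),
    Rule("VIP customer", lambda r: r.get("tier") == "vip", "handle_same_day"),
    Rule("large order", lambda r: r.get("amount", 0) >= 5000, "handle_same_day"),
    Rule("default", lambda r: True, "add_to_queue"),
]


def decide(request: dict, rules=RULES) -> Tuple[str, str]:
    """Return (action, rule name); the rule name doubles as an audit trail."""
    for rule in rules:
        if rule.applies(request):
            return rule.action, rule.name
    raise LookupError("no rule matched")  # only reachable without a default rule
```

A VIP request under legal hold goes to a manager, because the exception is checked first. If your best employee would handle that case differently, discovery is where the rule order gets corrected.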

2. Scope creep

Adding requirements mid-project extends timelines, increases costs, and often introduces bugs. The "one more thing" mentality kills projects.

Prevention: Agree on scope in writing. Maintain a "Phase 2" list for good ideas that emerge. Resist the urge to expand the first build.

3. Rushed testing

Pressure to launch before the agent is ready leads to production failures, lost trust, and expensive fixes.

Prevention: Budget adequate testing time. Trust the process. A week of testing is faster than a month of fixing production issues.

Notice what's not on this list? Technical failures. Integrations that don't work. AI that can't handle the complexity.

Those happen occasionally. But they're rare compared to process and project management failures. The technology works. The challenge is applying it correctly.

Questions to Ask Before Signing

Use these to evaluate any vendor, including us:

Timeline and process:

What's the week-by-week timeline?

How much of my time will this require?

What happens if we discover issues during testing?

How do you handle scope changes mid-project?

Deliverables:

What documentation will I receive?

Can I see decision logs showing what the agent does?

Who owns the agent if we part ways?

How do I make changes after launch?

Support:

What's included in ongoing support?

What response time can I expect for issues?

How do you handle bugs vs. feature requests?

What happens if the agent needs significant updates?

Risk:

What are the most common failure points?

What happens if the project fails? (Refund policy, etc.)

Can I get references from similar projects?

What would make you recommend we don't proceed?

That last question is important. A vendor willing to tell you "this isn't a good fit" is more trustworthy than one who says yes to everything.

Is Your Business Ready?

After reading this, you should have a clear sense of what implementation involves. Here's a quick readiness check:

Ready to proceed:

You can describe your target process in detail

The people who do this work can join discovery

You have 4-6 hours available over the next month

Your systems have APIs or integration capabilities

You know what success looks like

Need more preparation:

Your process isn't documented and varies by person

You're in the middle of other major changes

Key stakeholders aren't aligned on the project

You're not sure what problem you're solving

If you're ready, book a Bottleneck Audit and let's verify the project makes sense before committing. Thirty minutes, no cost, no pressure.

If you need preparation, that's fine. Use the Bottleneck Calculator to quantify the cost of your current process. Read about what AI agents actually do to make sure this is the right technology. Address any lingering concerns before moving forward.

The right time to build an agent is when you're ready, not before.

Want to see what finished agents look like? Download "Unstuck: 25 AI Agent Blueprints": real examples showing the process, the build, and the results.

Ready to explore whether an agent fits your situation? Book a free Bottleneck Audit. We'll assess your process and give you an honest recommendation.

by SP, CEO

for the AdAI Ed. Team